Datadog

Port's Datadog integration allows you to model Datadog resources in Port and ingest data into them.

Overview

This integration allows you to:

- Map and organize your desired Datadog resources and their metadata in Port (see supported resources below).

- Watch for Datadog object changes (create/update/delete) in real time, and automatically apply the changes to your software catalog.

Supported Resources

*SLO History and Service Metric resources are not collected out of the box. Follow the examples here to configure blueprints and resource mappings.

Setup

Choose one of the following installation methods:

- Hosted by Port

- Real-time (self-hosted)

- Scheduled (CI)

Using this installation option means that the integration will be hosted by Port, with a customizable resync interval to ingest data into Port.

Live event support

Currently, live events are not supported for integrations hosted by Port.

Resyncs will be performed periodically (with a configurable interval), or manually triggered by you via Port's UI.

Therefore, real-time events (including GitOps) will not be ingested into Port immediately.

Support for live events is a work in progress and is planned for the near future.

Installation

- OAuth

- Manual installation

This integration supports OAuth2 for quick installation with default settings.

- Go to the Datadog data source page in your portal.

- Under Select your installation method, choose Hosted by Port.

- Click Connect.

This will prompt you to authorize Port and install the integration with the following default settings:

- Resync interval: Every 1 hour.

- Send raw data examples: Enabled.

See the Application settings section below for descriptions of these settings.

Answers to common questions about OAuth integrations can be found here:

OAuth integrations FAQ

What permissions do I need to install the integration using OAuth2?

Any level of permission will work. However, the data fetched depends on the user’s access level:

- If the user has admin-level access, Port will fetch all project data.

- If the user has restricted access (e.g., only specific projects), Port will fetch data based on those permissions.

Are there any differences in the sync behavior between OAuth2 and custom token-based installation?

Token-Based Installation requires users to manually generate and provide tokens, offering control over permissions but increasing the risk of setup errors.

OAuth2 Installation automates the process, simplifying setup and reducing errors while aligning access levels with the user’s permissions.

Can multiple integrations use the same OAuth connection? Can multiple organizations use the same OAuth connection?

There is no limit to the number of OAuth connections you can create for integrations or organizations.

What is the level of permissions Port requests in the OAuth2 authentication flow, and why?

The exact permissions Port requests will appear when connecting the OAuth provider.

Port requests both read and write access so the secrets can be used later for running self-service actions (e.g., creating Jira tickets).

What happens if my integration shows an authorization error with the 3rd party?

OAuth tokens are refreshed automatically by Port, including before manual syncs.

If you encounter an HTTP 401 unauthorized error, try running a manual resync or wait for the next scheduled sync; either should resolve the issue.

If the error persists, please contact our support team.

What happens if I delete an installation of OAuth2?

- Deleting an OAuth2-based installation will not revoke access to the third-party service.

- Port will delete the OAuth secret, which prevents it from utilizing the connection for future syncs.

- If you reinstall the integration, you will need to reconnect OAuth.

- Actions relying on the deleted secret (e.g., creating a Jira ticket) will fail until the secret is recreated or the integration is reinstalled.

To manually configure the installation settings:

- Toggle on the Use Custom Settings switch.

- Configure the integration settings and application settings as you wish (see below for details).

Application settings

Every integration hosted by Port has the following customizable application settings, which are configurable after installation:

- Resync interval: The frequency at which Port will ingest data from the integration. There are various options available, ranging from every 1 hour to once a day.

- Send raw data examples: A boolean toggle (enabled by default). If enabled, raw data examples will be sent from the integration to Port. These examples are used when testing your mapping configuration: they allow you to run your jq expressions against real data and see the results.

Integration settings

Every integration has its own tool-specific settings, under the Integration settings section:

- Datadog Base Url: For example, https://api.datadoghq.com or https://api.datadoghq.eu. To identify your base URL, see the Datadog documentation.

- Datadog Api Key: To create an API key, see the Datadog documentation.

- Datadog Application Key: To create an application key, see the Datadog documentation.

- Datadog Webhook Token: Optional. This token is used to secure webhook communication between Datadog and Port. To learn more, see the Datadog documentation.

- Datadog Access Token: Optional. This is used to authenticate with Datadog using OAuth2. You should not set this value manually.

You can also hover over the ⓘ icon next to each setting to see a description.

Port secrets

Some integration settings require sensitive pieces of data, such as tokens.

For these settings, Port secrets will be used, ensuring that your sensitive data is encrypted and secure.

When filling in such a setting, its value will be obscured (shown as ••••••••).

For each such setting, Port will automatically create a secret in your organization.

To see all secrets in your organization, follow these steps.

Port source IP addresses

When using this installation method, Port will make outbound calls to your 3rd-party applications from static IP addresses.

You may need to add these addresses to your allowlist, in order to allow Port to interact with the integrated service:

Europe (EU):

- 54.73.167.226

- 63.33.143.237

- 54.76.185.219

United States (US):

- 3.234.37.33

- 54.225.172.136

- 3.225.234.99

Using this installation option means that the integration will be able to update Port in real time using webhooks.

Prerequisites

To install the integration, you need a Kubernetes cluster that the integration's container chart will be deployed to.

Please make sure that you have kubectl and helm installed on your machine, and that your kubectl CLI is connected to the Kubernetes cluster where you plan to install the integration.

If you are having trouble installing this integration, please refer to these troubleshooting steps.

For details about the available parameters for the installation, see the table below.

- Helm

- ArgoCD

To install the integration using Helm:

- Go to the Datadog data source page in your portal.

- Select the Real-time and always on method.

- A helm command will be displayed, with default values already filled out (e.g. your Port client ID, client secret, etc).

- Copy the command, replace the placeholders with your values, then run it in your terminal to install the integration.
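For orientation, the displayed command is roughly of the following shape. This is a sketch, not the exact command Port generates: the Helm repository URL and the port-ocean chart name come from the ArgoCD manifest further down, the --set keys come from the parameter table, and POLLING mirrors the ArgoCD values.yaml. The release name, the port-ocean namespace and the environment variable names (PORT_CLIENT_ID, DATADOG_API_KEY and so on) are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around a Helm installation of the Datadog integration.
# It assumes these variables are already exported (names are illustrative):
#   PORT_CLIENT_ID, PORT_CLIENT_SECRET, PORT_BASE_URL,
#   DATADOG_BASE_URL, DATADOG_API_KEY, DATADOG_APPLICATION_KEY
# For Datadog webhooks you would also add, for example:
#   --set integration.config.appHost="https://my-ocean-integration.com"
install_datadog_integration() {
  # Register (or refresh) Port's Helm chart repository.
  helm repo add --force-update port-labs https://port-labs.github.io/helm-charts

  # Install the release, or upgrade it in place if it already exists.
  helm upgrade --install my-ocean-datadog-integration port-labs/port-ocean \
    --namespace port-ocean --create-namespace \
    --set port.clientId="$PORT_CLIENT_ID" \
    --set port.clientSecret="$PORT_CLIENT_SECRET" \
    --set port.baseUrl="$PORT_BASE_URL" \
    --set initializePortResources=true \
    --set scheduledResyncInterval=60 \
    --set integration.identifier="my-ocean-datadog-integration" \
    --set integration.type="datadog" \
    --set integration.eventListener.type="POLLING" \
    --set integration.config.datadogBaseUrl="$DATADOG_BASE_URL" \
    --set integration.secrets.datadogApiKey="$DATADOG_API_KEY" \
    --set integration.secrets.datadogApplicationKey="$DATADOG_APPLICATION_KEY"
}
```

Call install_datadog_integration after exporting the variables. Because it uses helm upgrade --install, running it again upgrades the existing release instead of failing.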

The baseUrl, port_region, port.baseUrl, portBaseUrl, port_base_url and OCEAN__PORT__BASE_URL parameters are used to select which instance of the Port API will be used.

Port exposes two API instances, one for the EU region of Port, and one for the US region of Port.

- If you use the EU region of Port (https://app.getport.io), your API URL is https://api.getport.io.

- If you use the US region of Port (https://app.us.getport.io), your API URL is https://api.us.getport.io.
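If you script the installation, a small helper can pick the API URL from the region. This is a sketch: the function name port_api_url and its eu/us arguments are hypothetical; only the two URLs come from the list above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a Port region to the matching API base URL.
port_api_url() {
  case "$1" in
    eu|EU) echo "https://api.getport.io" ;;     # app.getport.io
    us|US) echo "https://api.us.getport.io" ;;  # app.us.getport.io
    *)
      echo "Unknown Port region: $1" >&2
      return 1
      ;;
  esac
}

# Example: set the value expected by OCEAN__PORT__BASE_URL.
# export OCEAN__PORT__BASE_URL="$(port_api_url eu)"
```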

To install the integration using ArgoCD:

- Create a values.yaml file in argocd/my-ocean-datadog-integration in your git repository with the content:

Remember to replace the placeholders for DATADOG_BASE_URL, DATADOG_API_KEY and DATADOG_APPLICATION_KEY.

initializePortResources: true
scheduledResyncInterval: 60
integration:
  identifier: my-ocean-datadog-integration
  type: datadog
  eventListener:
    type: POLLING
  config:
    datadogBaseUrl: DATADOG_BASE_URL
  secrets:
    datadogApiKey: DATADOG_API_KEY
    datadogApplicationKey: DATADOG_APPLICATION_KEY

- Install the my-ocean-datadog-integration ArgoCD Application by creating the following my-ocean-datadog-integration.yaml manifest:

Remember to replace the placeholders for YOUR_PORT_CLIENT_ID, YOUR_PORT_CLIENT_SECRET and YOUR_GIT_REPO_URL.

The ArgoCD documentation on multiple sources can be found here.

ArgoCD Application

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-ocean-datadog-integration
  namespace: argocd
spec:
  destination:
    namespace: my-ocean-datadog-integration
    server: https://kubernetes.default.svc
  project: default
  sources:
  - repoURL: 'https://port-labs.github.io/helm-charts/'
    chart: port-ocean
    targetRevision: 0.1.14
    helm:
      valueFiles:
      - $values/argocd/my-ocean-datadog-integration/values.yaml
      parameters:
        - name: port.clientId
          value: YOUR_PORT_CLIENT_ID
        - name: port.clientSecret
          value: YOUR_PORT_CLIENT_SECRET
        - name: port.baseUrl
          value: https://api.getport.io
  - repoURL: YOUR_GIT_REPO_URL
    targetRevision: main
    ref: values
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true


- Apply your application manifest with kubectl:

kubectl apply -f my-ocean-datadog-integration.yaml

This table summarizes the available parameters for the installation.

| Parameter | Description | Example | Required |
|---|---|---|---|
| port.clientId | Your Port client ID | | ✅ |
| port.clientSecret | Your Port client secret | | ✅ |
| port.baseUrl | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | | ✅ |
| integration.secrets.datadogApiKey | Datadog API key, docs can be found here | | ✅ |
| integration.secrets.datadogApplicationKey | Datadog application key, docs can be found here | | ✅ |
| integration.config.datadogBaseUrl | The base Datadog host. Defaults to https://api.datadoghq.com. If in EU, use https://api.datadoghq.eu | | ✅ |
| integration.secrets.datadogWebhookToken | Datadog webhook token. Learn more | | ❌ |
| integration.config.appHost | The host of the Port Ocean app. Used to set up the integration endpoint as the target for webhooks created in Datadog | https://my-ocean-integration.com | ✅ |
| integration.eventListener.type | The event listener type. Read more about event listeners | | ✅ |
| integration.type | The integration to be installed | | ✅ |
| scheduledResyncInterval | The number of minutes between each resync. When not set, the integration will resync for each event listener resync event. Read more about scheduledResyncInterval | | ❌ |
| initializePortResources | Default true. When set to true, the integration will create default blueprints and the Port app config mapping. Read more about initializePortResources | | ❌ |
| sendRawDataExamples | Enable sending raw data examples from the third-party API to Port for testing and managing the integration mapping. Default is true | | ❌ |

For advanced configuration such as proxies or self-signed certificates, click here.

This workflow/pipeline will run the Datadog integration once and then exit, which is useful for scheduled ingestion of data.

If you want the integration to update Port in real time using webhooks you should use the Real-time (self-hosted) installation option.
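The Jenkins, Azure DevOps and GitLab examples below read their settings from OCEAN__* variables. To fail fast when one is missing, you could run a check like the following before docker run. This is a sketch: check_required_vars is a hypothetical helper, and the variable list in the usage comment mirrors the required rows of the parameter tables below (OCEAN__PORT__BASE_URL is left out because the examples pass it inline).

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: report every unset or empty variable and
# return non-zero if at least one is missing.
check_required_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is Bash indirect expansion: the value of the variable named $name.
    if [ -z "${!name:-}" ]; then
      echo "Missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage in a CI step, before "docker run":
# check_required_vars \
#   OCEAN__PORT__CLIENT_ID \
#   OCEAN__PORT__CLIENT_SECRET \
#   OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY \
#   OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY \
#   OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL || exit 1
```

Reporting all missing names in one pass, rather than stopping at the first, saves a round trip when several secrets were not configured.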

- GitHub

- Jenkins

- Azure DevOps

- GitLab

Make sure to configure the following GitHub secrets:

| Parameter | Description | Example | Required |
|---|---|---|---|
| port_client_id | Your Port client ID (How to get the credentials) | | ✅ |
| port_client_secret | Your Port client secret (How to get the credentials) | | ✅ |
| port_base_url | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | | ✅ |
| config -> datadog_base_url | The base Datadog API URL | US: https://api.datadoghq.com, EU: https://api.datadoghq.eu | ✅ |
| config -> datadog_api_key | Datadog API key, docs can be found here | | ✅ |
| config -> datadog_application_key | Datadog application key, docs can be found here | | ✅ |
| config -> datadog_webhook_token | Datadog webhook token. Learn more | | ❌ |
| initialize_port_resources | Default true. When set to true, the integration will create default blueprints and the Port app config mapping. Read more about initializePortResources | | ❌ |
| identifier | The identifier of the integration that will be installed | | ❌ |
| version | The version of the integration that will be installed | latest | ❌ |

The following example uses the Ocean Sail GitHub Action to run the Datadog integration. For further information about the action, see the Ocean Sail GitHub Action documentation.

Here is an example datadog-integration.yml workflow file:

name: Datadog Exporter Workflow

on:
  workflow_dispatch:
  schedule:
    - cron: '0 */1 * * *' # Determines the scheduled interval for this workflow. This example runs every hour.

jobs:
  run-integration:
    runs-on: ubuntu-latest
    timeout-minutes: 30 # Set a time limit for the job

    steps:
      - uses: port-labs/ocean-sail@v1
        with:
          type: 'datadog'
          port_client_id: ${{ secrets.OCEAN__PORT__CLIENT_ID }}
          port_client_secret: ${{ secrets.OCEAN__PORT__CLIENT_SECRET }}
          port_base_url: https://api.getport.io
          config: |
            datadog_base_url: https://api.datadoghq.com
            datadog_api_key: ${{ secrets.DATADOG_API_KEY }}
            datadog_application_key: ${{ secrets.DATADOG_APP_KEY }}

Your Jenkins agent should be able to run docker commands.

Make sure to configure the following Jenkins Credentials of Secret Text type:

| Parameter | Description | Example | Required |
|---|---|---|---|
| OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY | Datadog API key, docs can be found here | | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY | Datadog application key, docs can be found here | | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL | The base API URL | https://api.datadoghq.com / https://api.datadoghq.eu | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_WEBHOOK_TOKEN | Datadog webhook token. Learn more | | ❌ |
| OCEAN__PORT__CLIENT_ID | Your Port client ID (How to get the credentials) | | ✅ |
| OCEAN__PORT__CLIENT_SECRET | Your Port client secret (How to get the credentials) | | ✅ |
| OCEAN__PORT__BASE_URL | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | | ✅ |
| OCEAN__INITIALIZE_PORT_RESOURCES | Default true. When set to true, the integration will create default blueprints and the Port app config mapping. Read more about initializePortResources | | ❌ |
| OCEAN__INTEGRATION__IDENTIFIER | The identifier of the integration that will be installed | | ❌ |

Here is an example Jenkinsfile (Groovy pipeline):

pipeline {
    agent any

    stages {
        stage('Run Datadog Integration') {
            steps {
                script {
                    withCredentials([
                        string(credentialsId: 'OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY', variable: 'OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY'),
                        string(credentialsId: 'OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY', variable: 'OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY'),
                        string(credentialsId: 'OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL', variable: 'OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL'),
                        string(credentialsId: 'OCEAN__PORT__CLIENT_ID', variable: 'OCEAN__PORT__CLIENT_ID'),
                        string(credentialsId: 'OCEAN__PORT__CLIENT_SECRET', variable: 'OCEAN__PORT__CLIENT_SECRET'),
                    ]) {
                        sh('''
                            # Set Docker image and run the container
                            integration_type="datadog"
                            version="latest"
                            image_name="ghcr.io/port-labs/port-ocean-${integration_type}:${version}"
                            docker run -i --rm --platform=linux/amd64 \
                                -e OCEAN__EVENT_LISTENER='{"type":"ONCE"}' \
                                -e OCEAN__INITIALIZE_PORT_RESOURCES=true \
                                -e OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY=$OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY \
                                -e OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY=$OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY \
                                -e OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL=$OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL \
                                -e OCEAN__PORT__CLIENT_ID=$OCEAN__PORT__CLIENT_ID \
                                -e OCEAN__PORT__CLIENT_SECRET=$OCEAN__PORT__CLIENT_SECRET \
                                -e OCEAN__PORT__BASE_URL='https://api.getport.io' \
                                $image_name

                            exit $?
                        ''')
                    }
                }
            }
        }
    }
}

Your Azure DevOps agent should be able to run docker commands. Learn more about agents here.

Variable groups store values and secrets you'll use in your pipelines across your project. Learn more

Setting Up Your Credentials

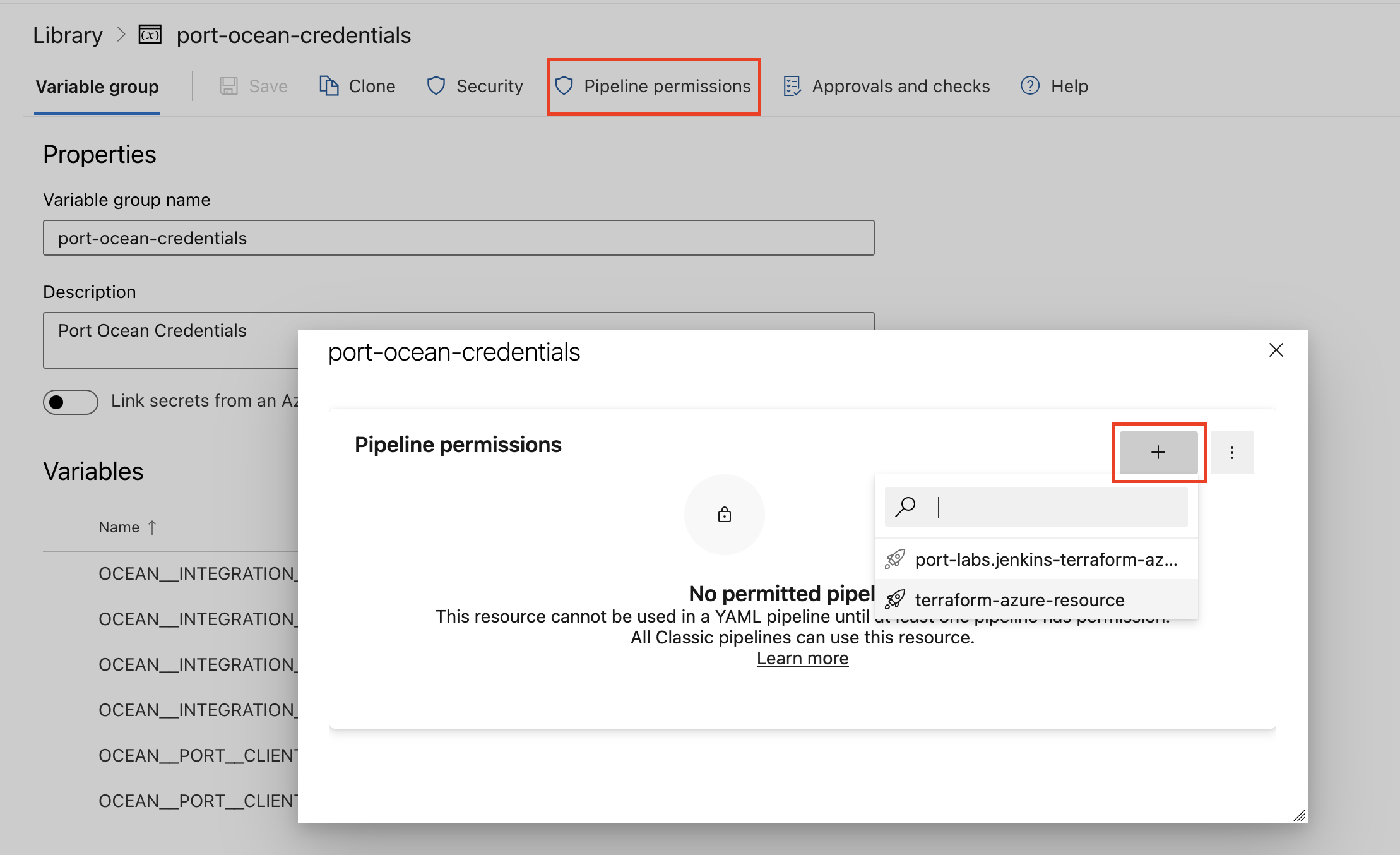

- Create a Variable Group: Name it port-ocean-credentials.

- Store the required variables (see the table below).

- Authorize Your Pipeline:

  - Go to "Library" -> "Variable groups."

  - Find port-ocean-credentials and click on it.

  - Select "Pipeline Permissions" and add your pipeline to the authorized list.

| Parameter | Description | Example | Required |
|---|---|---|---|
| OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY | Datadog API key, docs can be found here | | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY | Datadog application key, docs can be found here | | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL | The base API URL | https://api.datadoghq.com / https://api.datadoghq.eu | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_WEBHOOK_TOKEN | Datadog webhook token. Learn more | | ❌ |
| OCEAN__PORT__CLIENT_ID | Your Port client ID (How to get the credentials) | | ✅ |
| OCEAN__PORT__CLIENT_SECRET | Your Port client secret (How to get the credentials) | | ✅ |
| OCEAN__PORT__BASE_URL | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | | ✅ |
| OCEAN__INITIALIZE_PORT_RESOURCES | Default true. When set to true, the integration will create default blueprints and the Port app config mapping. Read more about initializePortResources | | ❌ |
| OCEAN__INTEGRATION__IDENTIFIER | The identifier of the integration that will be installed | | ❌ |

Here is an example datadog-integration.yml pipeline file:

trigger:
- main

pool:
  vmImage: "ubuntu-latest"

variables:
  - group: port-ocean-credentials

steps:
- script: |
    # Set Docker image and run the container
    integration_type="datadog"
    version="latest"
    image_name="ghcr.io/port-labs/port-ocean-$integration_type:$version"

    docker run -i --rm \
      -e OCEAN__EVENT_LISTENER='{"type":"ONCE"}' \
      -e OCEAN__INITIALIZE_PORT_RESOURCES=true \
      -e OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY=$(OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY) \
      -e OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY=$(OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY) \
      -e OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL=$(OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL) \
      -e OCEAN__PORT__CLIENT_ID=$(OCEAN__PORT__CLIENT_ID) \
      -e OCEAN__PORT__CLIENT_SECRET=$(OCEAN__PORT__CLIENT_SECRET) \
      -e OCEAN__PORT__BASE_URL='https://api.getport.io' \
      $image_name

    exit $?
  displayName: "Ingest Data into Port"

Make sure to configure the following GitLab variables:

| Parameter | Description | Example | Required |
|---|---|---|---|
| OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY | Datadog API key, docs can be found here | | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY | Datadog application key, docs can be found here | | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL | The base API URL | https://api.datadoghq.com / https://api.datadoghq.eu | ✅ |
| OCEAN__INTEGRATION__CONFIG__DATADOG_WEBHOOK_TOKEN | Datadog webhook token. Learn more | | ❌ |
| OCEAN__PORT__CLIENT_ID | Your Port client ID (How to get the credentials) | | ✅ |
| OCEAN__PORT__CLIENT_SECRET | Your Port client secret (How to get the credentials) | | ✅ |
| OCEAN__PORT__BASE_URL | Your Port API URL: https://api.getport.io for EU, https://api.us.getport.io for US | | ✅ |
| OCEAN__INITIALIZE_PORT_RESOURCES | Default true. When set to true, the integration will create default blueprints and the Port app config mapping. Read more about initializePortResources | | ❌ |
| OCEAN__INTEGRATION__IDENTIFIER | The identifier of the integration that will be installed | | ❌ |

Here is an example .gitlab-ci.yml pipeline file:

default:
  image: docker:24.0.5
  services:
    - docker:24.0.5-dind
  before_script:
    - docker info

variables:
  INTEGRATION_TYPE: datadog
  VERSION: latest

stages:
  - ingest

ingest_data:
  stage: ingest
  variables:
    IMAGE_NAME: ghcr.io/port-labs/port-ocean-$INTEGRATION_TYPE:$VERSION
  script:
    - |
      docker run -i --rm --platform=linux/amd64 \
        -e OCEAN__EVENT_LISTENER='{"type":"ONCE"}' \
        -e OCEAN__INITIALIZE_PORT_RESOURCES=true \
        -e OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY=$OCEAN__INTEGRATION__CONFIG__DATADOG_API_KEY \
        -e OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY=$OCEAN__INTEGRATION__CONFIG__DATADOG_APPLICATION_KEY \
        -e OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL=$OCEAN__INTEGRATION__CONFIG__DATADOG_BASE_URL \
        -e OCEAN__PORT__CLIENT_ID=$OCEAN__PORT__CLIENT_ID \
        -e OCEAN__PORT__CLIENT_SECRET=$OCEAN__PORT__CLIENT_SECRET \
        -e OCEAN__PORT__BASE_URL='https://api.getport.io' \
        $IMAGE_NAME
  rules: # Run only when changes are made to the main branch
    - if: '$CI_COMMIT_BRANCH == "main"'


Configuration

Port integrations use a YAML mapping block to ingest data from the third-party API into Port.

The mapping makes use of the JQ JSON processor to select, modify, concatenate, transform and perform other operations on existing fields and values from the integration API.

Examples

To view and test the integration's mapping against examples of the third-party API responses, use the jq playground in your data sources page. Find the integration in the list of data sources and click on it to open the playground.

Examples of blueprints and the relevant integration configurations can be found on the Datadog examples page.

Let's Test It

This section includes sample response data from Datadog, along with the entities created from the resync event based on the Ocean configuration provided in the previous section.

Payload

Here is an example of the payload structure from Datadog:

Monitor response data

{
  "id": 15173866,
  "org_id": 1000147697,
  "type": "query alert",
  "name": "A change @webhook-PORT",
  "message": "A change has happened",
  "tags": [
    "app:webserver"
  ],
  "query": "change(avg(last_5m),last_1h):avg:datadog.agent.running{local} by {version,host} > 40",
  "options": {
    "thresholds": {
      "critical": 40.0,
      "warning": 30.0
    },
    "notify_audit": false,
    "include_tags": true,
    "new_group_delay": 60,
    "notify_no_data": false,
    "timeout_h": 0,
    "silenced": {}
  },
  "multi": true,
  "created_at": 1706707941000,
  "created": "2024-01-31T13:32:21.270116+00:00",
  "modified": "2024-02-02T16:31:40.516062+00:00",
  "deleted": null,
  "restricted_roles": null,
  "priority": 5,
  "overall_state_modified": "2024-03-08T20:52:46+00:00",
  "overall_state": "No Data",
  "creator": {
    "name": "John Doe",
    "email": "john.doe@gmail.com",
    "handle": "john.doe@gmail.com",
    "id": 1001199545
  },
  "matching_downtimes": []
}

Service response data

{
  "type": "service-definition",
  "id": "04fbab48-a233-4592-8c53-d1bfe282e6c3",
  "attributes": {
    "meta": {
      "last-modified-time": "2024-05-29T10:31:06.833444245Z",
      "github-html-url": "",
      "ingestion-source": "api",
      "origin": "unknown",
      "origin-detail": "",
      "warnings": [
        {
          "keyword-location": "/properties/integrations/properties/opsgenie/properties/service-url/pattern",
          "instance-location": "/integrations/opsgenie/service-url",
          "message": "does not match pattern '^(https?://)?[a-zA-Z\\\\d_\\\\-.]+\\\\.opsgenie\\\\.com/service/([a-zA-Z\\\\d_\\\\-]+)/?$'"
        },
        {
          "keyword-location": "/properties/integrations/properties/pagerduty/properties/service-url/pattern",
          "instance-location": "/integrations/pagerduty/service-url",
          "message": "does not match pattern '^(https?://)?[a-zA-Z\\\\d_\\\\-.]+\\\\.pagerduty\\\\.com/service-directory/(P[a-zA-Z\\\\d_\\\\-]+)/?$'"
        }
      ],
      "ingested-schema-version": "v2.1"
    },
    "schema": {
      "schema-version": "v2.2",
      "dd-service": "inventory-management",
      "team": "Inventory Management Team",
      "application": "Inventory System",
      "tier": "Tier 1",
      "description": "Service for managing product inventory and stock levels.",
      "lifecycle": "production",
      "contacts": [
        {
          "name": "Inventory Team",
          "type": "email",
          "contact": "inventory-team@example.com"
        },
        {
          "name": "Warehouse Support",
          "type": "email",
          "contact": "warehouse-support@example.com"
        }
      ],
      "links": [
        {
          "name": "Repository",
          "type": "repo",
          "provider": "GitHub",
          "url": "https://github.com/example/inventory-service"
        },
        {
          "name": "Runbook",
          "type": "runbook",
          "provider": "Confluence",
          "url": "https://wiki.example.com/runbooks/inventory-service"
        }
      ],
      "tags": [
        "inventory",
        "stock"
      ],
      "integrations": {
        "pagerduty": {
          "service-url": "https://pagerduty.com/services/inventory"
        },
        "opsgenie": {
          "service-url": "https://opsgenie.com/services/inventory",
          "region": "US"
        }
      },
      "extensions": {
        "qui_6": {}
      }
    }
  }
}

SLO response data

{
  "id": "b6869ae6189d59baa421feb8b437fe9e",
  "name": "Availability SLO for shopping-cart service",
  "tags": [
    "service:shopping-cart",
    "env:none"
  ],
  "monitor_tags": [],
  "thresholds": [
    {
      "timeframe": "7d",
      "target": 99.9,
      "target_display": "99.9"
    }
  ],
  "type": "monitor",
  "type_id": 0,
  "description": "This SLO tracks the availability of the shopping-cart service. Availability is measured as the number of successful requests divided by the number of total requests for the service",
  "timeframe": "7d",
  "target_threshold": 99.9,
  "monitor_ids": [
    15173866,
    15216083,
    15254771
  ],
  "creator": {
    "name": "John Doe",
    "handle": "john.doe@gmail.com",
    "email": "john.doe@gmail.com"
  },
  "created_at": 1707215619,
  "modified_at": 1707215619
}
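Timestamps in these payloads use different units: the monitor's created_at (1706707941000) is in milliseconds and corresponds to its created field (2024-01-31T13:32:21), while the SLO's created_at and modified_at are in seconds. To check such a value by hand, here is a sketch that converts both to UTC ISO 8601. It assumes GNU coreutils date (the -d @EPOCH form is not accepted by BSD/macOS date), and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; they rely on GNU coreutils date.

# Convert Unix epoch seconds to a UTC ISO 8601 timestamp.
epoch_to_iso() {
  date -u -d "@$1" +%Y-%m-%dT%H:%M:%SZ
}

# Convert Unix epoch milliseconds by dropping the sub-second part first.
epoch_ms_to_iso() {
  epoch_to_iso "$(( $1 / 1000 ))"
}

epoch_to_iso 1707215619        # SLO modified_at    -> 2024-02-06T10:33:39Z
epoch_ms_to_iso 1706707941000  # monitor created_at -> 2024-01-31T13:32:21Z
```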

SLO history response data

{
  "thresholds": {
    "7d": {
      "timeframe": "7d",
      "target": 99,
      "target_display": "99."
    }
  },
  "from_ts": 1719254776,
  "to_ts": 1719859576,
  "type": "monitor",
  "type_id": 0,
  "slo": {
    "id": "5ec82408e83c54b4b5b2574ee428a26c",
    "name": "Host {{host.name}} with IP {{host.ip}} is not having enough memory",
    "tags": [
      "p69hx03",
      "pages-laptop"
    ],
    "monitor_tags": [],
    "thresholds": [
      {
        "timeframe": "7d",
        "target": 99,
        "target_display": "99."
      }
    ],
    "type": "monitor",
    "type_id": 0,
    "description": "Testing SLOs from DataDog",
    "timeframe": "7d",
    "target_threshold": 99,
    "monitor_ids": [
      147793
    ],
    "creator": {
      "name": "John Doe",
      "handle": "janesmith@gmail.com",
      "email": "janesmith@gmail.com"
    },
    "created_at": 1683878238,
    "modified_at": 1684773765
  },
  "overall": {
    "name": "Host {{host.name}} with IP {{host.ip}} is not having enough memory",
    "preview": false,
    "monitor_type": "query alert",
    "monitor_modified": 1683815332,
    "errors": null,
    "span_precision": 2,
    "history": [
      [
        1714596313,
        1
      ]
    ],
    "uptime": 3,
    "sli_value": 10,
    "precision": {
      "custom": 2,
      "7d": 2
    },
    "corrections": [],
    "state": "breached"
  }
}
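In the SLO history payload, from_ts and to_ts are Unix timestamps in seconds that bound the queried window. In this sample the window is exactly seven days, which matches the 7d timeframe: (1719859576 - 1719254776) / 86400 = 604800 / 86400 = 7. A minimal sketch of that check (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: length of an SLO history window in whole days.
# Arguments: from_ts and to_ts, both Unix epoch seconds.
window_days() {
  local from_ts="$1" to_ts="$2"
  echo $(( (to_ts - from_ts) / 86400 ))   # 86400 seconds per day
}

window_days 1719254776 1719859576   # prints 7
```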

Service metric response data

The Datadog response is enriched with a variety of metadata fields, including:

- __service: The name or identifier of the service generating the data.

- __query_id: A unique identifier for the query that generated the data.

- __query: The original query used to retrieve the data.

- __env: The environment associated with the data (e.g., production, staging).

These fields add context that the raw Datadog response does not carry on its own, such as the service and environment a time series belongs to, which makes the data easier to map, analyze and troubleshoot.

{
  "status": "ok",
  "res_type": "time_series",
  "resp_version": 1,
  "query": "avg:system.mem.used{service:inventory-management,env:staging}",
  "from_date": 1723796537000,
  "to_date": 1723797137000,
  "series": [
    {
      "unit": [
        {
          "family": "bytes",
          "id": 2,
          "name": "byte",
          "short_name": "B",
          "plural": "bytes",
          "scale_factor": 1.0
        }
      ],
      "query_index": 0,
      "aggr": "avg",
      "metric": "system.mem.used",
      "tag_set": [],
      "expression": "avg:system.mem.used{env:staging,service:inventory-management}",
      "scope": "env:staging,service:inventory-management",
      "interval": 2,
      "length": 39,
      "start": 1723796546000,
      "end": 1723797117000,
      "pointlist": [
        [1723796546000.0, 528986112.0],
        [1723796562000.0, 531886080.0],
        [1723796576000.0, 528867328.0],
        [1723796592000.0, 522272768.0],
        [1723796606000.0, 533704704.0],
        [1723796846000.0, 533028864.0],
        [1723796862000.0, 527417344.0],
        [1723796876000.0, 531513344.0],
        [1723796892000.0, 533577728.0],
        [1723796906000.0, 533471232.0],
        [1723796922000.0, 528125952.0],
        [1723796936000.0, 530542592.0],
        [1723796952000.0, 530767872.0],
        [1723796966000.0, 526966784.0],
        [1723796982000.0, 528560128.0],
        [1723796996000.0, 530792448.0],
        [1723797012000.0, 527384576.0],
        [1723797026000.0, 529534976.0],
        [1723797042000.0, 521650176.0],
        [1723797056000.0, 531001344.0],
        [1723797072000.0, 525955072.0],
        [1723797086000.0, 529469440.0],
        [1723797102000.0, 532279296.0],
        [1723797116000.0, 526979072.0]
      ],
      "display_name": "system.mem.used",
      "attributes": {}
    }
  ],
  "values": [],
  "times": [],
  "message": "",
  "group_by": [],
  "__service": "inventory-management",
  "__query_id": "avg:system.mem.used/service:inventory-management/env:staging",
  "__query": "avg:system.mem.used",
  "__env": "staging"
}
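In this sample, both the request's query and the enriched __query_id can be derived from __query, __service and __env. The sketch below reproduces that relationship for illustration only: it is inferred from this one payload, not taken from the integration's source code, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers reproducing the shape of the sample payload's fields.
# Arguments: $1 = __query, $2 = __service, $3 = __env

# Datadog metrics query scoped to one service and environment.
build_metric_query() {
  printf '%s{service:%s,env:%s}\n' "$1" "$2" "$3"
}

# Identifier in the same shape as the sample's __query_id.
build_query_id() {
  printf '%s/service:%s/env:%s\n' "$1" "$2" "$3"
}

build_metric_query avg:system.mem.used inventory-management staging
# -> avg:system.mem.used{service:inventory-management,env:staging}
build_query_id avg:system.mem.used inventory-management staging
# -> avg:system.mem.used/service:inventory-management/env:staging
```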

User response data

{
  "type": "users",
  "id": "468a7e28-4d70-11ef-9b68-c2d109cdf094",
  "attributes": {
    "name": "",
    "handle": "john.doe@example.com",
    "created_at": "2024-07-29T06:03:28.693227+00:00",
    "modified_at": "2024-07-29T06:05:30.392353+00:00",
    "email": "john.doe@example.com",
    "icon": "https://secure.gravatar.com/avatar/04659c42251ec0dacaa5eb88507e3016?s=48&d=retro",
    "title": "",
    "verified": true,
    "service_account": false,
    "disabled": false,
    "allowed_login_methods": [],
    "status": "Active",
    "mfa_enabled": false
  },
  "relationships": {
    "roles": {
      "data": [
        {
          "type": "roles",
          "id": "dbc3577a-396e-11ef-b12b-da7ad0900002"
        }
      ]
    },
    "org": {
      "data": {
        "type": "orgs",
        "id": "dbb758e9-396e-11ef-800c-bea43249b5f6"
      }
    }
  }
}

Team response data

{
  "type": "team",
  "attributes": {
    "description": "",
    "modified_at": "2024-12-09T08:44:00.526954+00:00",
    "is_managed": false,
    "link_count": 0,
    "user_count": 3,
    "name": "Dummy",
    "created_at": "2024-12-09T08:44:00.526949+00:00",
    "handle": "Dummy",
    "summary": null
  },
  "relationships": {
    "user_team_permissions": {
      "links": {
        "related": "/api/v2/team/b7c4d123-a981-4b75-cd5b-86e246513f3c/permission-settings"
      }
    },
    "team_links": {
      "links": {
        "related": "/api/v2/team/b7c4d123-a981-4b75-cd5b-86e246513f3c/links"
      }
    }
  },
  "id": "b7c4d123-a981-4b75-cd5b-86e246513f3c",
  "__members": [
    {
      "type": "users",
      "id": "579e8a70-4d70-11ef-b68c-f276c989c0b2",
      "attributes": {
        "name": "John Doe",
        "handle": "john.doe@example.com",
        "email": "john.doe@example.com",
        "icon": "https://secure.gravatar.com/avatar/c6ad65005b22c404c949f5ad826e8fc4?s=48&d=retro",
        "disabled": false,
        "service_account": false
      }
    }
  ]
}

Mapping Result

The combination of the sample payloads and the Ocean configuration generates the following Port entities:

Monitor entity in Port

{

"identifier": "15173866",

"title": "A change @webhook-PORT",

"icon": "Datadog",

"blueprint": "datadogMonitor",

"team": [],

"properties": {

"tags": [

"app:webserver"

],

"overallState": "No Data",

"priority": "5",

"createdAt": "2024-01-31T13:32:21.270116+00:00",

"updatedAt": "2024-02-02T16:31:40.516062+00:00",

"createdBy": "john.doe@gmail.com",

"monitorType": "query alert"

},

"relations": {},

"createdAt": "2024-05-29T09:43:34.750Z",

"createdBy": "<port-client-id>",

"updatedAt": "2024-05-29T09:43:34.750Z",

"updatedBy": "<port-client-id>"

}

Service entity in Port

{

"identifier": "inventory-management",

"title": "inventory-management",

"icon": "Datadog",

"blueprint": "datadogService",

"team": [],

"properties": {

"owners": [

"inventory-team@example.com",

"warehouse-support@example.com"

],

"links": [

"https://github.com/example/inventory-service",

"https://wiki.example.com/runbooks/inventory-service"

],

"description": "Service for managing product inventory and stock levels.",

"tags": [

"inventory",

"stock"

],

"application": "Inventory System"

},

"relations": {},

"createdAt": "2024-05-29T10:31:44.283Z",

"createdBy": "<port-client-id>",

"updatedAt": "2024-05-29T10:31:44.283Z",

"updatedBy": "<port-client-id>"

}

SLO entity in Port

{

"identifier": "b6869ae6189d59baa421feb8b437fe9e",

"title": "Availability SLO for shopping-cart service",

"icon": "Datadog",

"blueprint": "datadogSlo",

"team": [],

"properties": {

"description": "This SLO tracks the availability of the shopping-cart service. Availability is measured as the number of successful requests divided by the number of total requests for the service",

"updatedAt": "2024-02-06T10:33:39Z",

"createdBy": "ahosea15@gmail.com",

"sloType": "monitor",

"targetThreshold": "99.9",

"tags": [

"service:shopping-cart",

"env:none"

],

"createdAt": "2024-02-06T10:33:39Z"

},

"relations": {

"monitors": [

"15173866",

"15216083",

"15254771"

],

"services": [

"shopping-cart"

]

},

"createdAt": "2024-05-29T09:43:51.946Z",

"createdBy": "<port-client-id>",

"updatedAt": "2024-05-29T12:02:01.559Z",

"updatedBy": "<port-client-id>"

}
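In the sample SLO entity, the services relation (shopping-cart) lines up with the SLO's service:shopping-cart tag. Below is a minimal Python sketch of that extraction. It assumes services are identified by tags of the form service:<name>. The function name is hypothetical. The integration itself expresses its mappings in JQ, not Python.

```python
def services_from_tags(tags: list) -> list:
    """Return service names from Datadog tags of the form 'service:<name>'.

    Tags without the 'service:' key are ignored, and duplicates are dropped
    while preserving the original order.
    """
    services = []
    for tag in tags:
        key, sep, value = tag.partition(":")
        if sep and key == "service" and value and value not in services:
            services.append(value)
    return services


print(services_from_tags(["service:shopping-cart", "env:none"]))
```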

SLO history entity in Port

{

"identifier": "5ec82408e83c54b4b5b2574ee428a26c",

"title": "Host {{host.name}} with IP {{host.ip}} is not having enough memory",

"icon": "Datadog",

"blueprint": "datadogSloHistory",

"team": [],

"properties": {

"sampling_end_date": "2024-07-01T18:46:16Z",

"sliValue": 10,

"sampling_start_date": "2024-06-24T18:46:16Z"

},

"relations": {

"slo": "5ec82408e83c54b4b5b2574ee428a26c"

},

"createdAt": "2024-07-01T09:43:51.946Z",

"createdBy": "<port-client-id>",

"updatedAt": "2024-07-01T12:02:01.559Z",

"updatedBy": "<port-client-id>"

}

Service metric entity in Port

{

"identifier": "avg:system.disk.used/service:inventory-management/env:prod",

"title": "avg:system.disk.used{service:inventory-management,env:prod}",

"icon": null,

"blueprint": "datadogServiceMetric",

"team": [],

"properties": {

"query": "avg:system.disk.used",

"series": [],

"res_type": "time_series",

"from_date": "2024-08-16T07:32:00Z",

"to_date": "2024-08-16T08:02:00Z",

"env": "prod"

},

"relations": {

"service": "inventory-management"

},

"createdAt": "2024-08-15T15:54:36.638Z",

"createdBy": "<port-client-id>",

"updatedAt": "2024-08-16T08:02:02.399Z",

"updatedBy": "<port-client-id>"

}
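In the sample service metric entity, both the identifier and the title are built from the query, the service, and the environment. The same identifier format appears as __query_id in the sample response. The Python sketch below reproduces the two formats. It infers them from these samples only, and metric_identity is a hypothetical name.

```python
def metric_identity(query: str, service: str, env: str) -> tuple:
    """Build (identifier, title) for a service metric entity.

    The formats mirror the sample entity and response in this page; they are
    inferred, not taken from the integration's source code.
    """
    identifier = f"{query}/service:{service}/env:{env}"
    # Doubled braces in an f-string produce literal "{" and "}".
    title = f"{query}{{service:{service},env:{env}}}"
    return identifier, title


identifier, title = metric_identity("avg:system.disk.used", "inventory-management", "prod")
print(identifier)  # avg:system.disk.used/service:inventory-management/env:prod
print(title)       # avg:system.disk.used{service:inventory-management,env:prod}
```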

User entity in Port

{

"identifier": "1e675d18-b002-11ef-9df4-2a2722803530",

"title": "Matan Grady",

"team": [],

"properties": {

"email": "john.doe@example.com",

"handle": "john.doe@example.com",

"status": "Active",

"disabled": false,

"verified": true

},

"relations": {

"team": []

},

"icon": "Datadog"

}
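The user entity's properties come from the attributes object of a Datadog user. Below is a minimal Python sketch of that transformation. Two choices in it are assumptions: the identifier is the Datadog user id, and the title falls back to the handle when name is empty, as it is in the sample response. The function name user_to_entity is hypothetical.

```python
def user_to_entity(user: dict) -> dict:
    """Map a Datadog user object to a Port entity payload (sketch).

    Assumptions: identifier = user id; title = name, or handle if the
    name is empty. Properties mirror the sample user entity above.
    """
    attributes = user.get("attributes", {})
    return {
        "identifier": user["id"],
        "title": attributes.get("name") or attributes.get("handle"),
        "properties": {
            "email": attributes.get("email"),
            "handle": attributes.get("handle"),
            "status": attributes.get("status"),
            "disabled": attributes.get("disabled"),
            "verified": attributes.get("verified"),
        },
    }


user = {
    "type": "users",
    "id": "468a7e28-4d70-11ef-9b68-c2d109cdf094",
    "attributes": {
        "name": "",
        "handle": "john.doe@example.com",
        "email": "john.doe@example.com",
        "status": "Active",
        "disabled": False,
        "verified": True,
    },
}
print(user_to_entity(user)["title"])  # john.doe@example.com
```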

Team entity in Port

{

"identifier": "b7c4d123-a981-4b75-cd5b-86e246513f3c",

"title": "Dummy",

"team": [],

"properties": {

"description": "",

"handle": "Dummy",

"userCount": 3

},

"relations": {

"members": [

"579e8a70-4d70-11ef-b68c-f276c989c0b2"

]

},

"icon": "Datadog"

}
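The team entity above can be traced field by field to the team response: id, attributes.name, attributes.handle, attributes.user_count, and the member IDs from the __members list. Below is a minimal Python sketch of that mapping. The function name team_to_entity is hypothetical, and the property names are copied from the sample entity.

```python
def team_to_entity(team: dict) -> dict:
    """Map a Datadog team object (with its __members list) to a Port entity."""
    attributes = team.get("attributes", {})
    return {
        "identifier": team["id"],
        "title": attributes.get("name"),
        "properties": {
            "description": attributes.get("description"),
            "handle": attributes.get("handle"),
            "userCount": attributes.get("user_count"),
        },
        "relations": {
            # One relation target per member user ID.
            "members": [member["id"] for member in team.get("__members", [])],
        },
    }


team = {
    "type": "team",
    "id": "b7c4d123-a981-4b75-cd5b-86e246513f3c",
    "attributes": {"description": "", "name": "Dummy", "handle": "Dummy", "user_count": 3},
    "__members": [{"type": "users", "id": "579e8a70-4d70-11ef-b68c-f276c989c0b2"}],
}
print(team_to_entity(team)["relations"])
```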

Relevant Guides

For relevant guides and examples, see the guides section.

Alternative installation via webhook

While the Ocean integration described above is the recommended installation method, you may prefer to use a webhook to ingest alerts and monitor data from Datadog. If so, use the following instructions:

Note that when using this method, data will be ingested into Port only when the webhook is triggered.

Webhook installation

Port configuration

Create the following blueprint definitions:

Datadog microservice blueprint

{

"identifier": "microservice",

"title": "Microservice",

"icon": "Service",

"schema": {

"properties": {

"description": {

"title": "Description",

"type": "string"

}

},

"required": []

},

"mirrorProperties": {},

"calculationProperties": {},

"relations": {}

}

Datadog alert/monitor blueprint

{

"identifier": "datadogAlert",

"description": "This blueprint represents a Datadog monitor/alert in our software catalog",

"title": "Datadog Alert",

"icon": "Datadog",

"schema": {

"properties": {

"url": {

"type": "string",

"format": "url",

"title": "Event URL"

},

"message": {

"type": "string",

"title": "Details"

},

"eventType": {

"type": "string",

"title": "Event Type"

},

"priority": {

"type": "string",

"title": "Metric Priority"

},

"creator": {

"type": "string",

"title": "Creator"

},

"alertMetric": {

"type": "string",

"title": "Alert Metric"

},

"alertType": {

"type": "string",

"title": "Alert Type",

"enum": ["error", "warning", "success", "info"]

},

"tags": {

"type": "array",

"items": {

"type": "string"

},

"title": "Tags"

}

},

"required": []

},

"mirrorProperties": {},

"calculationProperties": {},

"relations": {

"microservice": {

"title": "Services",

"target": "microservice",

"required": false,

"many": false

}

}

}
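The blueprints can also be created programmatically instead of through the UI. Below is a minimal Python sketch that only builds the request and does not send it. It assumes Port's REST API is reachable at https://api.getport.io/v1 and that you already hold an access token. Accounts hosted in other regions may use a different host. The function name build_blueprint_request is hypothetical.

```python
import json
import urllib.request

# Assumption: Port's EU API host; accounts in other regions may use another host.
PORT_API_URL = "https://api.getport.io/v1"


def build_blueprint_request(blueprint: dict, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates a blueprint."""
    return urllib.request.Request(
        f"{PORT_API_URL}/blueprints",
        data=json.dumps(blueprint).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


microservice = {
    "identifier": "microservice",
    "title": "Microservice",
    "icon": "Service",
    "schema": {
        "properties": {"description": {"title": "Description", "type": "string"}},
        "required": [],
    },
    "relations": {},
}

# Create "microservice" before "datadogAlert", because the alert blueprint's
# relation targets it. Send with urllib.request.urlopen(request).
request = build_blueprint_request(microservice, "<access-token>")
```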

Create the following webhook configuration using the Port UI:

Datadog webhook configuration

- Basic details tab - fill in the following details:

  - Title: Datadog Alert Mapper

  - Identifier: datadog_alert_mapper

  - Description: A webhook configuration for alert/monitor events from Datadog

  - Icon: Datadog

- Integration configuration tab - fill in the following JQ mapping:

[

{

"blueprint": "datadogAlert",

"entity": {

"identifier": ".body.alert_id | tostring",

"title": ".body.title",

"properties": {

"url": ".body.event_url",

"message": ".body.message",

"eventType": ".body.event_type",

"priority": ".body.priority",

"creator": ".body.creator",

"alertMetric": ".body.alert_metric",

"alertType": ".body.alert_type",

"tags": ".body.tags | split(\", \")"

},

"relations": {

"microservice": ".body.service"

}

}

}

]

- Click Save at the bottom of the page.
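To preview what the mapping produces before relying on it, you can approximate the same transformation in Python. This sketch is for local testing only. It assumes the Datadog payload arrives under .body with the field names from the payload template in the next section. The name map_alert is hypothetical.

```python
def map_alert(body: dict) -> dict:
    """Approximate the JQ mapping above for a single Datadog webhook body.

    .body.alert_id | tostring   -> str(body["alert_id"])
    .body.tags | split(", ")    -> body["tags"].split(", ")
    """
    tags = body.get("tags") or ""
    return {
        "blueprint": "datadogAlert",
        "entity": {
            "identifier": str(body["alert_id"]),
            "title": body.get("title"),
            "properties": {
                "url": body.get("event_url"),
                "message": body.get("message"),
                "eventType": body.get("event_type"),
                "priority": body.get("priority"),
                "creator": body.get("creator"),
                "alertMetric": body.get("alert_metric"),
                "alertType": body.get("alert_type"),
                # An empty tags string becomes an empty list rather than [""].
                "tags": tags.split(", ") if tags else [],
            },
            "relations": {"microservice": body.get("service")},
        },
    }


body = {
    "alert_id": "1234567890",
    "title": "[Triggered] [Memory Alert]",
    "event_url": "https://app.datadoghq.com/event/jump_to?event_id=1234567890",
    "message": "This is a test message",
    "event_type": "triggered",
    "priority": "normal",
    "creator": "rudy",
    "alert_metric": "system.load.1",
    "alert_type": "error",
    "service": "my-service",
    "tags": "monitor, name:myService, role:computing-node",
}
print(map_alert(body)["entity"]["properties"]["tags"])
# ['monitor', 'name:myService', 'role:computing-node']
```

The split separator is a comma followed by a space. If your tags arrive separated by a bare comma, they stay joined in a single element, so adjust the separator in the JQ mapping accordingly.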

The webhook configuration's relation mapping will work only when the identifiers of the Port microservice entities match the names of the services or hosts in your Datadog account.

Create a webhook in Datadog

- Log in to Datadog with your credentials.

- Click Integrations in the left sidebar.

- Search for Webhooks in the search box and select it.

- Go to the Configuration tab and follow the installation instructions.

- Click New.

- Input the following details:

  - Name - use a meaningful name such as Port_Webhook.

  - URL - enter the value of the url key you received after creating the webhook configuration.

  - Payload - when an alert is triggered on your monitors, this payload will be sent to the webhook URL. You can enter this JSON placeholder in the textbox:

{

"id": "$ID",

"message": "$TEXT_ONLY_MSG",

"priority": "$PRIORITY",

"last_updated": "$LAST_UPDATED",

"event_type": "$EVENT_TYPE",

"event_url": "$LINK",

"service": "$HOSTNAME",

"creator": "$USER",

"title": "$EVENT_TITLE",

"date": "$DATE",

"org_id": "$ORG_ID",

"org_name": "$ORG_NAME",

"alert_id": "$ALERT_ID",

"alert_metric": "$ALERT_METRIC",

"alert_status": "$ALERT_STATUS",

"alert_title": "$ALERT_TITLE",

"alert_type": "$ALERT_TYPE",

"tags": "$TAGS"

}

  - Custom Headers - configure any custom HTTP headers to be added to the webhook event. Provide the headers in JSON format.

- Click Save at the bottom of the page.
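Before wiring up real monitors, you can post a hand-written event that mimics the payload template above to the webhook URL, then check that an entity appears in Port. Below is a minimal standard-library Python sketch. WEBHOOK_URL is a placeholder for the url value from your Port webhook configuration. The event values are copied from the sample payload on this page, and build_test_event is a hypothetical name.

```python
import json
import urllib.request
from typing import Optional

# Placeholder: replace with the url value shown in your Port webhook configuration.
WEBHOOK_URL = "https://ingest.getport.io/YOUR_WEBHOOK_KEY"


def build_test_event(url: str, event: dict, extra_headers: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a JSON POST that imitates a Datadog webhook call."""
    headers = {"Content-Type": "application/json"}
    headers.update(extra_headers or {})
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers=headers,
        method="POST",
    )


event = {
    "alert_id": "1234567890",
    "title": "[Triggered] [Memory Alert]",
    "event_type": "triggered",
    "alert_type": "error",
    "service": "my-service",
    # Comma-separated string, as in the sample payload on this page.
    "tags": "monitor, name:myService, role:computing-node",
}
request = build_test_event(WEBHOOK_URL, event)
# To actually send it:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```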

To view the different payloads and the structure of Datadog webhook events, refer to the Datadog Webhooks integration documentation.

Done! Any monitor that notifies this webhook (by mentioning @webhook-Port_Webhook, or the name you chose, in its message) will trigger a webhook event. Port will parse the events according to the mapping and update the catalog entities accordingly.

Let's Test It

This section includes a sample webhook payload from Datadog. It also includes the entity created from the webhook event, based on the webhook configuration provided in the previous section.

Payload

Here is an example of the payload structure from Datadog:

Webhook response data

{

"id": "1234567890",

"message": "This is a test message",

"priority": "normal",

"last_updated": "2022-01-01T00:00:00+00:00",

"event_type": "triggered",

"event_url": "https://app.datadoghq.com/event/jump_to?event_id=1234567890",

"service": "my-service",

"creator": "rudy",

"title": "[Triggered] [Memory Alert]",

"date": "1406662672000",

"org_id": "123456",

"org_name": "my-org",

"alert_id": "1234567890",

"alert_metric": "system.load.1",

"alert_status": "system.load.1 over host:my-host was > 0 at least once during the last 1m",

"alert_title": "[Triggered on {host:ip-012345}] Host is Down",

"alert_type": "error",

"tags": "monitor, name:myService, role:computing-node"

}

Mapping Result

The combination of the sample payload and the webhook configuration generates the following Port entity:

Alert entity in Port

{

"identifier": "1234567890",

"title": "[Triggered] [Memory Alert]",

"blueprint": "datadogAlert",

"team": [],

"icon": "Datadog",

"properties": {

"url": "https://app.datadoghq.com/event/jump_to?event_id=1234567890",

"message": "This is a test message",

"eventType": "triggered",

"priority": "normal",

"creator": "rudy",

"alertMetric": "system.load.1",

"alertType": "error",

"tags": [

"monitor",

"name:myService",

"role:computing-node"

]

},

"relations": {

"microservice": "my-service"

},

"createdAt": "2024-02-06T09:30:57.924Z",

"createdBy": "<port-client-id>",

"updatedAt": "2024-02-06T11:49:20.881Z",

"updatedBy": "<port-client-id>"

}

Ingest service level objectives (SLOs)

This guide will walk you through the steps to ingest Datadog SLOs into Port. By following these steps, you will be able to create a blueprint for a microservice entity in Port, representing a service in your Datadog account. Furthermore, you will establish a relation between this service and the datadogSLO blueprint, allowing the ingestion of all defined SLOs from your Datadog account.

The provided example demonstrates how to pull data from Datadog's REST API at scheduled intervals using GitLab Pipelines and report the data to Port.
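The scheduled job's first step is a call to Datadog's SLO API. Below is a minimal Python sketch that builds one page of that request without sending it. It assumes the US1 site (https://api.datadoghq.com), the GET /api/v1/slo endpoint with limit and offset parameters, and API and application keys passed in the DD-API-KEY and DD-APPLICATION-KEY headers. The function name build_slo_request is hypothetical.

```python
import urllib.parse
import urllib.request

# Assumption: the US1 Datadog site; other sites (e.g. datadoghq.eu) use a different host.
DATADOG_API_URL = "https://api.datadoghq.com"


def build_slo_request(api_key: str, app_key: str, limit: int = 100, offset: int = 0) -> urllib.request.Request:
    """Build (but do not send) a request for one page of SLOs."""
    query = urllib.parse.urlencode({"limit": limit, "offset": offset})
    return urllib.request.Request(
        f"{DATADOG_API_URL}/api/v1/slo?{query}",
        headers={
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
            "Accept": "application/json",
        },
        method="GET",
    )


request = build_slo_request("<api-key>", "<application-key>", limit=50)
```

The SLOs in each response are listed under data. Increasing offset by limit until a page returns fewer than limit items is one way to walk through all of them.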

Ingest service dependencies from your APM

In this example, you will create a service blueprint that ingests all services and their related dependencies in your Datadog APM using the REST API. You will then add a shell script that creates new entities in Port every time a scheduled GitLab CI pipeline runs.
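The core of that script is turning the dependency map into entities with relations. Below is a minimal Python sketch of that step. It assumes the APM service dependencies response maps each service name to an object with a calls list. The relation name dependencies and the function name are hypothetical. The sketch also creates an entity for every callee that never appears as a key, so each relation has a target.

```python
def dependencies_to_entities(dependencies: dict) -> list:
    """Turn a {service: {"calls": [...]}} mapping into Port entity payloads.

    Services that are only ever called (never keys themselves) still get an
    entity, so every relation target exists.
    """
    names = set(dependencies)
    for info in dependencies.values():
        names.update(info.get("calls", []))
    entities = []
    for name in sorted(names):
        calls = dependencies.get(name, {}).get("calls", [])
        entities.append({
            "identifier": name,
            "title": name,
            "relations": {"dependencies": sorted(set(calls))},
        })
    return entities


sample = {"web-store": {"calls": ["orders-db", "cart"]}, "cart": {"calls": []}}
for entity in dependencies_to_entities(sample):
    print(entity["identifier"], entity["relations"]["dependencies"])
```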

Ingest service catalog

In this example, you will create a datadogServiceCatalog blueprint that ingests the services in your Datadog service catalog. You will then add a Python script that calls the Datadog REST API to fetch data for your account.
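A script like this usually has to page through every service definition. Below is a minimal Python sketch of that loop. It assumes the script calls Datadog's GET /api/v2/services/definitions endpoint with page[size] and page[number] parameters, with page numbers starting at 0. Verify both points against the Datadog API reference. The HTTP call is injected as fetch_page, a hypothetical callable, so the paging logic can be tested without network access.

```python
from typing import Callable, Iterator


def iter_service_definitions(fetch_page: Callable[[int, int], list], page_size: int = 100) -> Iterator:
    """Yield service definitions page by page until a short page is returned.

    fetch_page(page_number, page_size) should return the "data" list of one
    page; numbering is assumed to start at 0.
    """
    page_number = 0
    while True:
        items = fetch_page(page_number, page_size)
        yield from items
        if len(items) < page_size:
            break
        page_number += 1


# Fake fetcher standing in for the HTTP call, for illustration only.
definitions = [{"id": f"svc-{i}"} for i in range(5)]


def fake_fetch(page_number: int, page_size: int) -> list:
    start = page_number * page_size
    return definitions[start:start + page_size]


print([d["id"] for d in iter_service_definitions(fake_fetch, page_size=2)])
```

Stopping on a short page means one extra, empty request when the total is an exact multiple of page_size. This keeps the loop independent of any pagination metadata in the response.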